Why Europe’s AI Future Lies in the Edge, Not the Cloud

image: ASML

For the past three years, the narrative surrounding Europe’s position in the global artificial intelligence race has been one of anxiety. The headlines are familiar: Europe has missed the boat. Europe has no Google, no OpenAI, no NVIDIA. While the United States builds the "brains" of the future in massive gigawatt-scale data centers in Virginia and Iowa, Europe regulates, deliberates, and falls behind.

This narrative seems coherent on the surface, but it oversimplifies both the potential of AI and the direction Europe is taking.

As we close 2025, there are some clear facts on the table. Europe is not “failing to compete” in the race to build massive Foundation Models; it is physically and economically disqualified from it. But in conceding the battle for "Training," Europe may still be in a position to do well in the far larger, more durable economy of "Inference."

The future of AI is not a unipolar American moment but a bifurcation driven by the laws of thermodynamics.

Why Training Left Europe

To understand why Europe cannot be the home of the next GPT-6, you don't need to look at code; you need to look at utility bills.

Training a frontier AI model is, at its core, an energy arbitrage trade. It requires concentrating roughly 100,000 H100 GPUs in a single location and running them at maximum load for months. This requires on the order of a hundred megawatts or more of reliable baseload power, and the next generation of clusters is being planned at gigawatt scale.

In 2025, the mathematics of this are brutal. Industrial electricity in the US averages roughly $48 per MWh, kept low by cheap natural gas and a deregulated grid. In the industrial heartlands of Europe, that same megawatt-hour costs between $90 and $100. When you multiply that delta by the energy a frontier training run consumes, the business case for a "European OpenAI" evaporates.
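The multiplication described above can be sketched as a short calculation. The electricity prices and the cluster size come from the text; the per-GPU draw of 700 W (the rated maximum of an H100 SXM module), the facility overhead factor of 1.5, and the 90-day run length are illustrative assumptions, not figures from this article.

```python
def training_energy_mwh(gpus, watts_per_gpu, overhead, days):
    """Facility energy in MWh for one training run at constant full load."""
    gpu_power_mw = gpus * watts_per_gpu / 1_000_000  # watts -> megawatts
    return gpu_power_mw * overhead * 24 * days       # MW * hours = MWh


def cost_gap_usd(energy_mwh, us_price, eu_price):
    """Extra electricity cost of running the same job at the European price."""
    return energy_mwh * (eu_price - us_price)


# Assumed inputs: 700 W per GPU, 1.5x overhead for cooling, CPUs and networking,
# and a 90-day run. Prices per MWh are the ones quoted in the text.
energy = training_energy_mwh(gpus=100_000, watts_per_gpu=700, overhead=1.5, days=90)
gap_low = cost_gap_usd(energy, us_price=48, eu_price=90)
gap_high = cost_gap_usd(energy, us_price=48, eu_price=100)

print(f"Energy for one run: {energy:,.0f} MWh")
print(f"Extra cost in Europe: ${gap_low:,.0f} to ${gap_high:,.0f}")
```

The result scales linearly with cluster size, run length, and the number of runs, so substituting other assumptions is a matter of changing the arguments.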

Furthermore, the capital expenditure (Capex) gap has become insurmountable. This year alone, US technological sovereignty was purchased with over $200 billion in hyperscaler infrastructure spending. The entire European venture capital ecosystem deploys less than a quarter of that annually across all sectors.

Europe cannot build the "Brain." The energy grid is too expensive, the land is too scarce, and the capital pools are too shallow. If the race were only about Training, Europe would indeed be finished.

From Building the Brain to Using It

However, the AI economy is not a monolith. It has two distinct phases: Training (Capital Expenditure) and Inference (Operational Expenditure).

Training is the act of creating the model, for example by reading the internet to learn patterns. It happens once per model. Inference is the act of using the model: generating an answer, steering a car, or analyzing a medical scan. It happens billions of times a day.

While the US, in competition with China, has locked up the market for Training and become the world’s "Intelligence Refinery," Europe is well placed to prosper in the market for Inference.

Inference does not require a nuclear power plant. It requires efficiency, low latency, and trust. These are the qualities that define Europe’s industrial DNA.

Where Physics Meets Intelligence

The primary driver of the European AI strategy is not the consumer asking a chatbot for a poem; it is the machine.

Consider a BMW factory in Munich or a Vestas wind turbine in the North Sea. These assets generate massive amounts of data and require split-second decisions. They cannot afford the latency of sending data to an Azure server in Virginia and waiting for a response. Furthermore, they often cannot rely on a consistent internet connection.
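The latency point has a physical floor that can be estimated in a few lines. The sketch below computes the great-circle distance between Munich and northern Virginia and the fastest possible round trip over optical fiber. The coordinates are approximate, and the fiber signal speed of about 200,000 km/s (roughly two thirds of the speed of light in vacuum) is a commonly used approximation; both are assumptions introduced for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_SPEED_KM_S = 200_000.0  # assumption: light in fiber travels at ~2/3 of c


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time: there and back at fiber speed."""
    return 2 * distance_km / FIBER_SPEED_KM_S * 1000


# Approximate coordinates (assumptions): Munich and a northern Virginia data center hub.
MUNICH = (48.14, 11.58)
NORTHERN_VIRGINIA = (39.04, -77.49)

distance_km = great_circle_km(*MUNICH, *NORTHERN_VIRGINIA)
rtt_ms = min_round_trip_ms(distance_km)

print(f"Distance: {distance_km:,.0f} km")
print(f"Minimum round trip: {rtt_ms:.0f} ms")
```

Real round trips are longer, because cables do not follow great circles and routing, queuing, and the model's own execution time all add delay. A control loop that has to react within a few milliseconds cannot meet its deadline over this path no matter how fast the remote server is.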

This reality has birthed the "Edge AI" sector—running intelligence locally on the device. This is where the battle moves from "Brute Force" (US) to "Precision Engineering" (Europe).

Leading this charge are companies like Axelera AI (Eindhoven/Milan). While NVIDIA dominates the energy-hungry training chips, Axelera is targeting the market for "Inference Silicon": chips that run powerful AI models using a fraction of the power (under 5 watts). This is the "NVIDIA-hedge": not bigger and hotter, but smaller and cooler. It is the only way to put a brain inside a drone, a camera, or a robotic arm.

The "Linux of AI"

If hardware is one half of the inference equation, software is the other. Here, the French champion Mistral AI has executed one of the most brilliant strategic pivots of the decade.

While OpenAI and Google fight "The War of the Trillions" (trillions of parameters), Mistral realized that for 90% of business use cases, you don't need a huge general intelligence. You need a reliable, efficient worker.

Mistral’s focus on the Mistral Small and Ministral family of models (3 billion to 8 billion parameters) has effectively created the "Linux of AI." These models are "Open Weight," meaning they can be downloaded and run on a local server. They are the perfect size for a German mid-sized manufacturer ("Mittelstand") that wants to automate its supply chain but refuses to upload its proprietary data to a US cloud.
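A rough way to see why models in this size range fit on a local server is to estimate the memory their weights occupy. The sketch below multiplies the parameter count by the bytes stored per weight. The precision levels (16, 8, and 4 bits) are common storage formats chosen here as assumptions, and the estimate deliberately ignores activations and other runtime memory.

```python
def weight_memory_gb(parameters, bits_per_weight):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return parameters * bits_per_weight / 8 / 1e9


# Parameter counts from the text; bit widths are illustrative assumptions.
for parameters in (3e9, 8e9):
    for bits in (16, 8, 4):
        size = weight_memory_gb(parameters, bits)
        print(f"{parameters / 1e9:.0f}B parameters at {bits}-bit: {size:.1f} GB")
```

Under these assumptions, every configuration in the range stays within the memory of a single workstation-class accelerator, which is what makes on-premise deployment practical for a mid-sized firm.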

By creating the standard operating system for the Edge, Mistral ensures that while the US might own the cloud, Europe owns the on-premise reality.

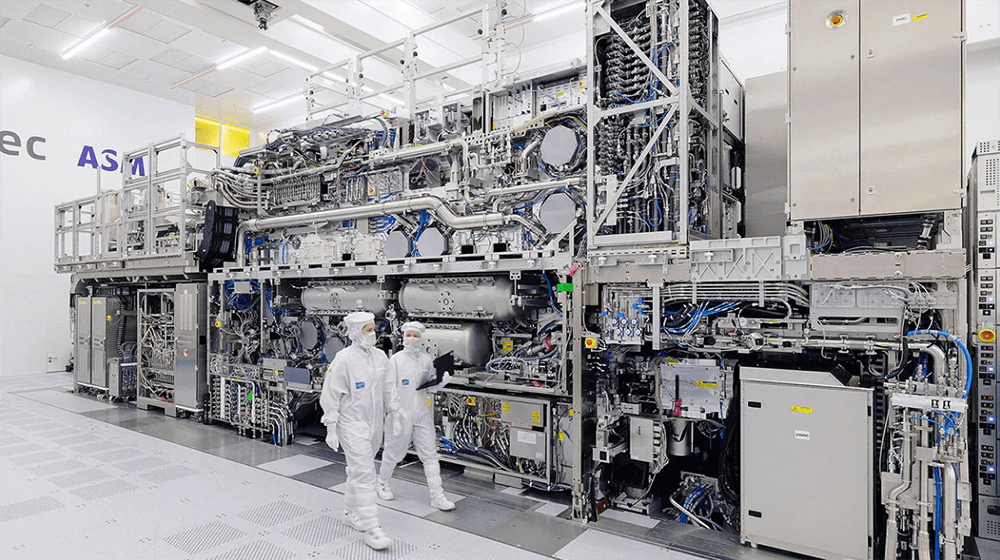

Mistral & ASML Validation

If you need an example to show that Europe’s future lies in "Industrial AI," consider ASML’s strategic €1.3 billion investment in Mistral. ASML, the sole supplier of the world’s most advanced (EUV) lithography machines, is not partnering with Mistral for access to a chatbot. It is integrating Mistral’s "Ministral" edge models directly into the lithography fabrication process. ASML has reportedly begun replacing generic cloud models with local, fine-tuned Mistral instances to handle visual pattern recognition and predictive maintenance on the factory floor. This is the "Inference Thesis" in action: the most valuable hardware company in Europe (ASML) protecting its IP by using the most efficient software company in Europe (Mistral) to run "Air-Gapped" intelligence where the data never leaves the cleanroom.

Privacy as a Feature

The final pillar of Europe’s inference dominance is regulatory. For years, Silicon Valley viewed GDPR and the EU AI Act as stifling burdens. In the Inference economy, they are competitive moats.

European institutions (hospitals, defense contractors, banks) are increasingly allergic to US data centers. The US CLOUD Act, which allows American authorities to compel US companies to hand over data they hold, even when it is stored outside the United States, is a non-starter for strategic European assets.

This has created a booming market for "Sovereign Inference." European integrators (like SAP, Aleph Alpha, and defense firms like Helsing) are building "Air-Gapped" AI solutions. They take a specialized model (like Mistral), install it on a secure server in the client’s basement, and cut the cord to the internet.

In this environment, "Performance" is secondary to "Residency." A US model might be 5% smarter, but if it requires data exfiltration to California, it is disqualified. This protectionism-by-privacy ensures that the domestic market for critical AI applications remains in European hands.

A Dual-Engine World

As we look toward 2030, the map of global AI is not a unipolar American empire. It is a bifurcated system with two distinct engines.

The United States will remain the Training Hub. It will burn the energy, spend the trillions in Capex, and push the boundaries of Artificial General Intelligence (AGI). It will be the "Cloud."

Europe will become the Inference Hub. It will take those breakthroughs, distill them into efficient, small-scale models, and embed them into the physical world of robotics, manufacturing, and defense. It will be the "Edge."

This is not a story of defeat. It is a story of specialization. Europe has realized that in a gold rush, it is profitable to sell the shovels, but it is even more profitable to own the machines that use the gold to build things. By abandoning the impossible race for "Training," Europe has secured its future in the economy of "Doing."

The future of European tech is not in the data center; it is in the factory, the hospital, and the grid.