The Algorithmic Backbone: How AI is Rewiring the DNA of Finance

For years, "Artificial Intelligence in Finance" was a phrase largely reserved for innovation labs and tech conferences—a futuristic promise of efficiency that rarely left the pilot stage. In 2026, that era of experimentation has abruptly ended. We are now witnessing the industrialization of intelligence. The most critical development in the global economy is no longer the mere existence of AI, but its aggressive operationalization into the core nervous system of banking, payments, and risk management. As algorithms begin to make decisions on loan approvals, fraud detection, and capital allocation, the industry is navigating a high-stakes collision between exponential technological capability and a fragmented global regulatory landscape.

This represents a fundamental re-platforming of the financial sector. We are moving from a world of static, rules-based systems to dynamic, probabilistic engines that learn in real time. This transition is unlocking immense value (potentially $1 trillion annually by 2030), but it is also forcing a complete rethinking of governance, liability, and the very definition of banking.

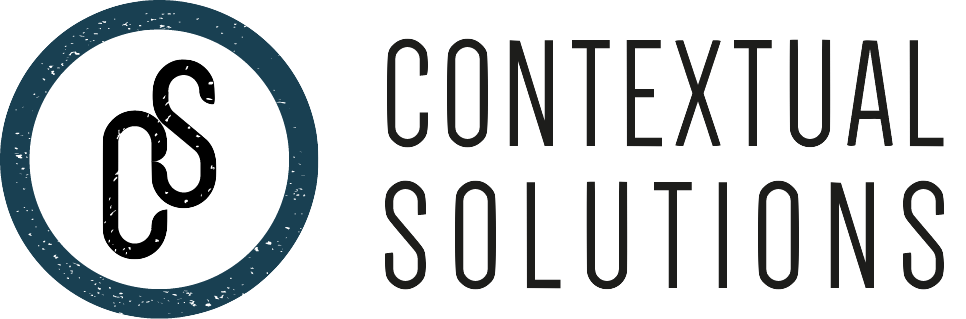

The Productivity Engine

The driving force behind this adoption is a stark economic reality: efficiency is no longer optional. In an environment of compressed margins and intense competition, AI has become the primary lever for survival. By early 2026, the metrics were undeniable: financial institutions that had fully integrated generative and predictive AI into their workflows were reporting operational cost reductions of nearly 20%.

This isn't just about automated customer support chatbots. The integration has gone much deeper. Major U.S. banks like JPMorgan Chase and Goldman Sachs have effectively doubled their AI capabilities every 100 days throughout 2025, moving beyond simple automation to complex decision-making. AI agents now draft initial credit memos, predict liquidity shortfalls with unprecedented accuracy, and generate regulatory compliance reports in seconds rather than weeks.

According to the Finance Trends 2026 report, 46% of financial services firms have now implemented AI to a "high degree" across their operations, compared to just 28% of general corporations. This disparity highlights a crucial trend: finance is adopting AI faster than the broader corporate world. The "human-in-the-loop" model remains the standard for high-stakes decisions, but the loop is tightening. The machine does the heavy lifting; the human provides the final sign-off.

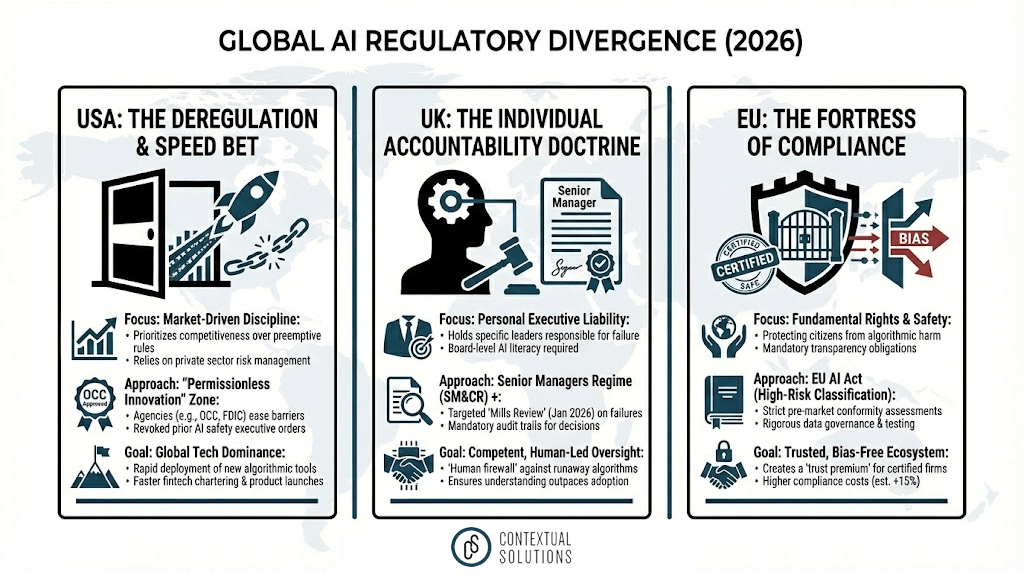

A Tale of Three Regimes

As the technology accelerates, the rules of the road are splintering. In 2026, we are seeing a "Great Divergence" in AI regulation, creating a complex geopolitical puzzle for multinational banks.

The United States: The Deregulation Bet

Under the Trump administration, the U.S. has doubled down on a pro-innovation, deregulatory stance. Following the revocation of the previous administration's AI safety executive order in January 2025, federal agencies like the Office of the Comptroller of the Currency (OCC) and the FDIC have been directed to prioritize competitiveness. The prevailing philosophy is that excessive guardrails could stifle American dominance in AI. Consequently, U.S. banks enjoy a "permissionless innovation" environment, allowing them to deploy new algorithmic tools rapidly. While this has spurred a boom in fintech charters and product launches, it has also transferred significant risk management responsibility directly to the private sector, relying on market discipline rather than government mandates to check algorithmic bias or failure.

The European Union: The Fortress of Compliance

In sharp contrast, the European Union has activated the world's most stringent AI governance framework. The EU AI Act, which entered a critical implementation phase for financial services in August 2026, classifies many financial AI applications, such as credit scoring and life insurance risk assessment, as "high-risk." This designation triggers mandatory conformity assessments, rigorous data governance requirements, and strict transparency obligations. For a bank in Paris or Frankfurt, deploying a credit algorithm now requires a documented trail showing that the system has been tested for prohibited bias and that its decisions can be explained. While this has increased compliance costs by an estimated 15%, it has also created a "trust premium" for European fintechs, which market their "certified safe" AI as a competitive advantage.

The United Kingdom: The Accountability Doctrine

The UK has carved out a middle path. Instead of a sweeping new "AI Law," the Financial Conduct Authority (FCA) has leaned into the existing Senior Managers & Certification Regime (SM&CR). The focus is on individual accountability. If an algorithm discriminates against a borrower, the regulator doesn't just fine the bank; it looks for the specific executive who signed off on the model. This "human firewall" approach, bolstered by the Mills Review launched in January 2026, forces boardrooms to become deeply literate in AI risks, with the aim of ensuring that technology never outpaces leadership's ability to understand it.

The Emerging Market Leap

Perhaps the most exciting story of 2026 is happening away from the traditional financial capitals. In emerging markets, AI is not just optimizing old systems; it is building entirely new ones. Nigeria stands out as a global case study in this leapfrog effect.

According to a February 2026 report by the Central Bank of Nigeria (CBN), a staggering 87.5% of Nigerian fintech companies now use AI specifically for fraud detection. In a market where trust is the currency of growth, AI has become the immune system of the digital economy. This robust defense layer has supported an explosion in volume: Nigerian financial institutions processed nearly 11 billion instant payment transactions in 2024, a volume that exceeds that of many developed nations.

Furthermore, AI is driving financial inclusion in ways previously thought impossible. With 88% of Nigerian adults reporting usage of AI chatbots, fintechs are leveraging these familiar interfaces to offer personalized micro-lending and financial advice to the unbanked. By analyzing alternative data points—such as mobile phone usage patterns rather than traditional credit scores—AI algorithms are extending credit to millions of citizens who were invisible to the legacy banking system. This is "embedded finance" in its purest form: invisible, intelligent, and inclusive.

The Ghost in the Machine

Despite the trillion-dollar upside, the integration of AI brings systemic risks that keep regulators awake at night. The primary concern is algorithmic bias. Without careful calibration, AI models trained on historical data can perpetuate—and automate—historical inequalities. For instance, a mortgage lending model might inadvertently "redline" entire neighborhoods based on proxy variables like zip codes or shopping habits, systematically denying credit to minority groups without a human ever making a conscious decision to discriminate.

In the U.S., where collecting demographic data such as race is often restricted to prevent discrimination, detecting this "invisible bias" is technically challenging: a lender cannot easily compare outcomes across groups it is not allowed to record. In contrast, the EU's mandate for "bias mitigation" forces firms to actively test for and correct these skews.
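
Testing for this kind of skew usually starts by comparing outcomes across groups. The Python sketch below computes each group's approval rate and the ratio of the lowest rate to the highest. It assumes an auditor can attach a group label to each decision after the fact, which is exactly the data that is hard to obtain in the U.S. The 0.8 cutoff is the "four-fifths" rule of thumb from U.S. employment-discrimination guidance, used here only as an illustrative threshold; it is not a test prescribed by the EU AI Act. The group names and counts are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group from (group, approved) audit records."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no disparity to measure
    return min(rates.values()) / highest


# Hypothetical audit sample. The model never saw the group label; it is
# attached afterwards, purely so outcomes can be compared across groups.
sample = (
    [("group_a", True)] * 80 + [("group_a", False)] * 20
    + [("group_b", True)] * 50 + [("group_b", False)] * 50
)

ratio = disparate_impact_ratio(sample)
print(f"disparate impact ratio: {ratio:.3f}")
if ratio < 0.8:  # "four-fifths" heuristic, an assumed threshold for this sketch
    print("flag for review: possible disparate impact")
```

For this sample the approval rates are 0.8 and 0.5, so the ratio is 0.625 and the check flags the model for review. A real audit would add statistical significance tests and look for the proxy variables driving the gap, but the core comparison is this simple once group labels are available.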

There is also the risk of herding behavior. If every major bank uses the same few foundation models (e.g., from OpenAI or Anthropic) to assess market risk, they may all decide to sell the same asset at the exact same moment during a crisis, triggering a catastrophic liquidity spiral. This "model monoculture" is a new form of systemic risk that traditional stress tests are only beginning to address.

“When the world trains its intelligence through a handful of systems, we lose not just diversity of thought, but the foundation of value itself.”

- Joseph Emerick, Cyber & Information Security Professional
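
The monoculture mechanism can be illustrated with a toy simulation. This is a minimal Python sketch under loudly stated assumptions: the number of banks, the normally distributed daily "stress" signal, the sell threshold, and the idea that model diversity amounts to independent per-bank noise are all hypothetical simplifications, not a description of any real risk model or regulatory stress test. Both runs share the same market path, so the only difference is whether the banks' models agree.

```python
import random

SELL_THRESHOLD = 1.28  # assumed: a bank sells when its risk score exceeds this


def sell_fractions(n_banks, n_days, shared_model, seed=0):
    """Fraction of banks selling the same asset on each simulated day.

    Every bank sees the same market stress signal. With shared_model=True
    they all score it with one common model; otherwise each bank's own
    (hypothetical) model adds an independent error, so readings disagree.
    """
    market_rng = random.Random(seed)     # identical market path in both runs
    error_rng = random.Random(seed + 1)  # per-bank model disagreement
    fractions = []
    for _ in range(n_days):
        stress = market_rng.gauss(0, 1)
        sellers = 0
        for _ in range(n_banks):
            error = 0.0 if shared_model else error_rng.gauss(0, 1)
            if stress + error > SELL_THRESHOLD:
                sellers += 1
        fractions.append(sellers / n_banks)
    return fractions


def unanimous_days(fractions):
    """Number of days on which every bank sold at once (a fire-sale day)."""
    return sum(1 for f in fractions if f == 1.0)


for shared in (True, False):
    fr = sell_fractions(n_banks=20, n_days=1000, shared_model=shared)
    label = "shared model" if shared else "diverse models"
    print(f"{label}: {unanimous_days(fr)} days with a unanimous sell-off")
```

With a shared model, each day's fraction can only be 0 or 1, so every stress episode becomes a unanimous sell-off. With independent model errors, sell decisions spread out and unanimous sell-offs become far rarer, which is the diversity that a "model monoculture" removes.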

The Era of the AI-Native Bank

As we look toward the rest of the decade, the divide between "traditional banks" and "fintechs" is evaporating. In 2026, every financial institution is becoming an AI company that happens to move money. The winners will not necessarily be those with the biggest balance sheets, but those with the best data governance and the most agile "human-machine" teams.

The regulatory fragmentation we see today, between the U.S. deregulatory approach, the EU's rights-based framework, and the UK's accountability model, will likely persist. Global firms will be forced to become chameleons, adapting their AI strategies to local norms. However, the trajectory is clear: the invisible hand of the market is being digitized. As AI takes the wheel of global finance, the industry's challenge is to ensure that this powerful new engine remains under control, ethical, and aligned with the human economy it was built to serve.